Personalization Strategies to Accelerate Conversion Rate

Once companies have established a functional marketing system, personalization is typically the next big lever for increasing return on investment. Successful personalization can have a significant positive impact on conversion rate and client satisfaction. But how should you put it into practice? What's the best way to tailor your marketing to the different segments of your audience?

Below are a few essential personalization strategies that can improve your funnel’s efficiency and make your audience feel like you genuinely understand their needs and concerns.

Update Your Personas

Before personalizing something for a specific audience, you must understand them. Most companies don’t make it a regular habit to review and update their buyer personas. A deep understanding of personas is vital if you want to succeed with personalization. Take some time to refresh your knowledge of each buyer persona you’ll be targeting. It may reinforce what you already know, but in many cases, you’ll uncover new trends or ideas related to a specific segment that might help with personalization. For example, is there a significant new industry regulation that one of your personas must now consider as they make decisions?

Even seemingly minor factors can provide insights that translate to more successful marketing. According to HubSpot, addressing concerns on a landing page can increase conversions by as much as 80%. Best of all, the information you learn will be applicable across different funnels and campaigns in the future.

Get Input From Users

It’s always easy to speculate in a vacuum about what users want or how they prefer to receive information. But the best way to understand your target audience is to hear it directly from them. That means talking to current and previous clients about a few significant concerns, including:

- What are your biggest challenges at work?

- Have any of those challenges changed since you became a client?

- What would make your job easier over the next month/quarter/year?

- How helpful have you found other solutions in our industry?

Notice that these questions are general inquiries about clients' work and business functions; they have nothing to do with the specifics of your offering. Feedback on particular aspects of your product or service is always important, but asking for it in the same conversation where you request updated details about their professional needs can be overwhelming.

User surveys are valuable for personalization, but you must keep them concise and tightly focused. Beyond that, offering some reward or compensation to users who provide feedback that enhances your personalization efforts can also be helpful. It shows you value their time and the insights they provide, even if it's just a percentage discount or a small gift card. At the very least, you should explain how you'll use their feedback to make your offering better and more helpful to users like them.

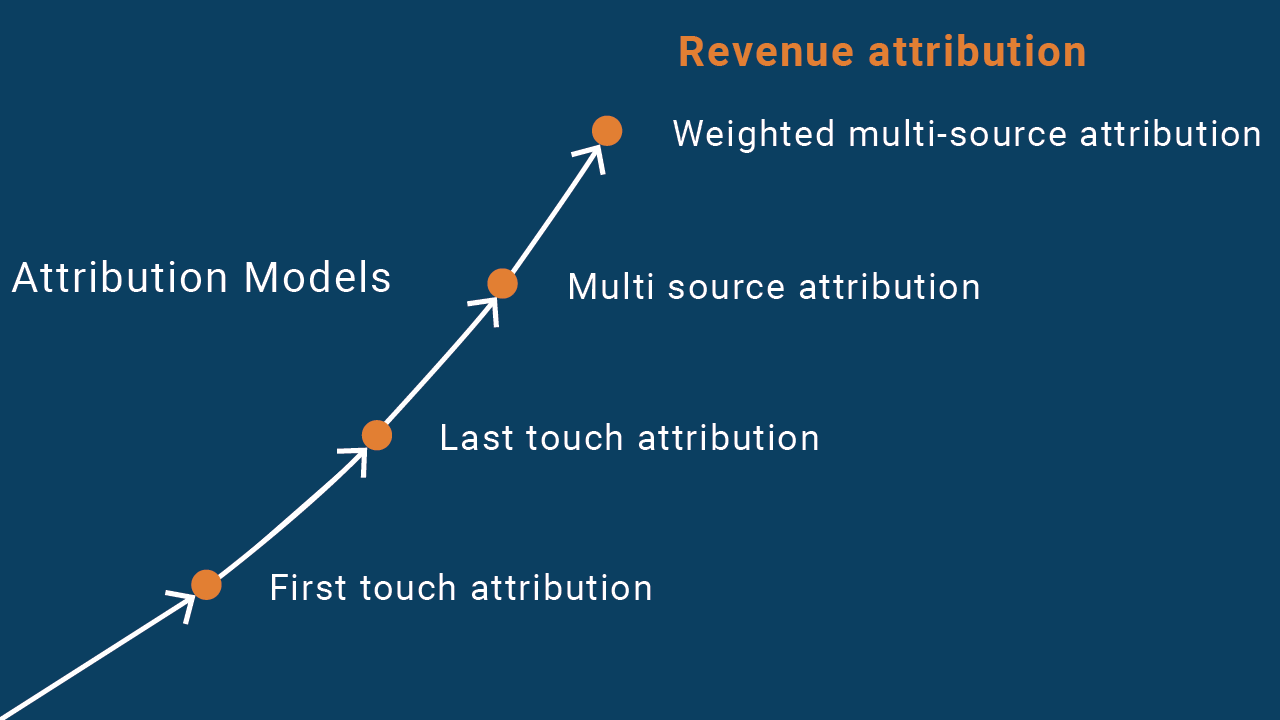

Source Data From Different Business Functions

Many marketing teams face a similar challenge: siloed data. Instead of understanding the lifecycle of a customer from prospect through purchase and retention, they can only interpret the needs and concerns of prospects through the lens of marketing actions: whether or not they convert, how long they stay on a website, their job titles, and so on. The problem with this approach is that it ignores parts of the customer journey that personalization depends on. Customer interactions outside of marketing can provide a richer picture of what customers actually want from your offering.

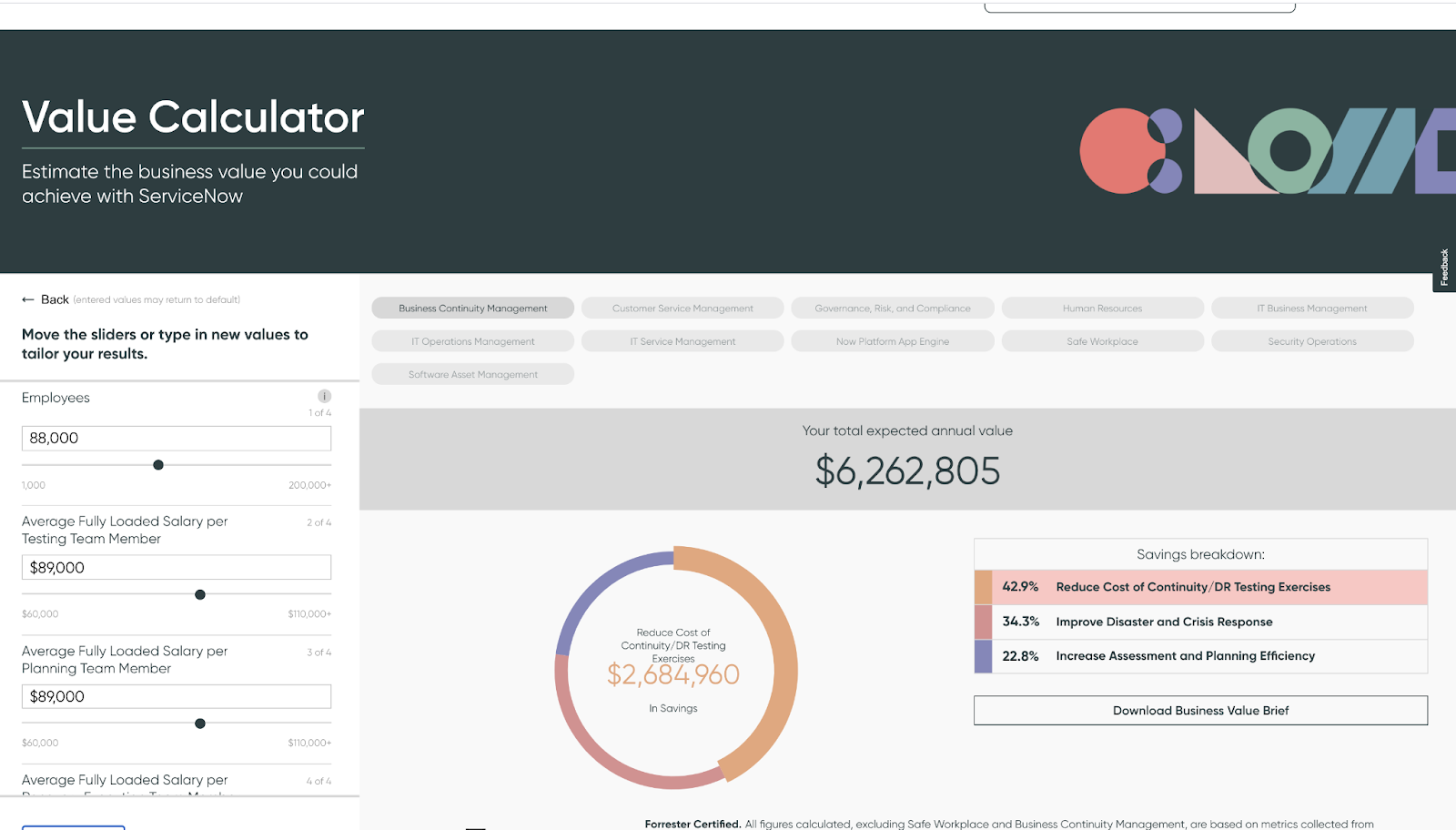

For example, let's say you provide a software solution that helps mortgage companies streamline their internal operations. It's valuable to know what kind of landing pages, emails, and advertisements will get the attention of prospects and convert them into subscribers or customers of your business. If you can also access reports on their interactions with customer service, or data on which areas of your tool they spend the most time in, you can use that information to personalize the messages in your funnel even further.

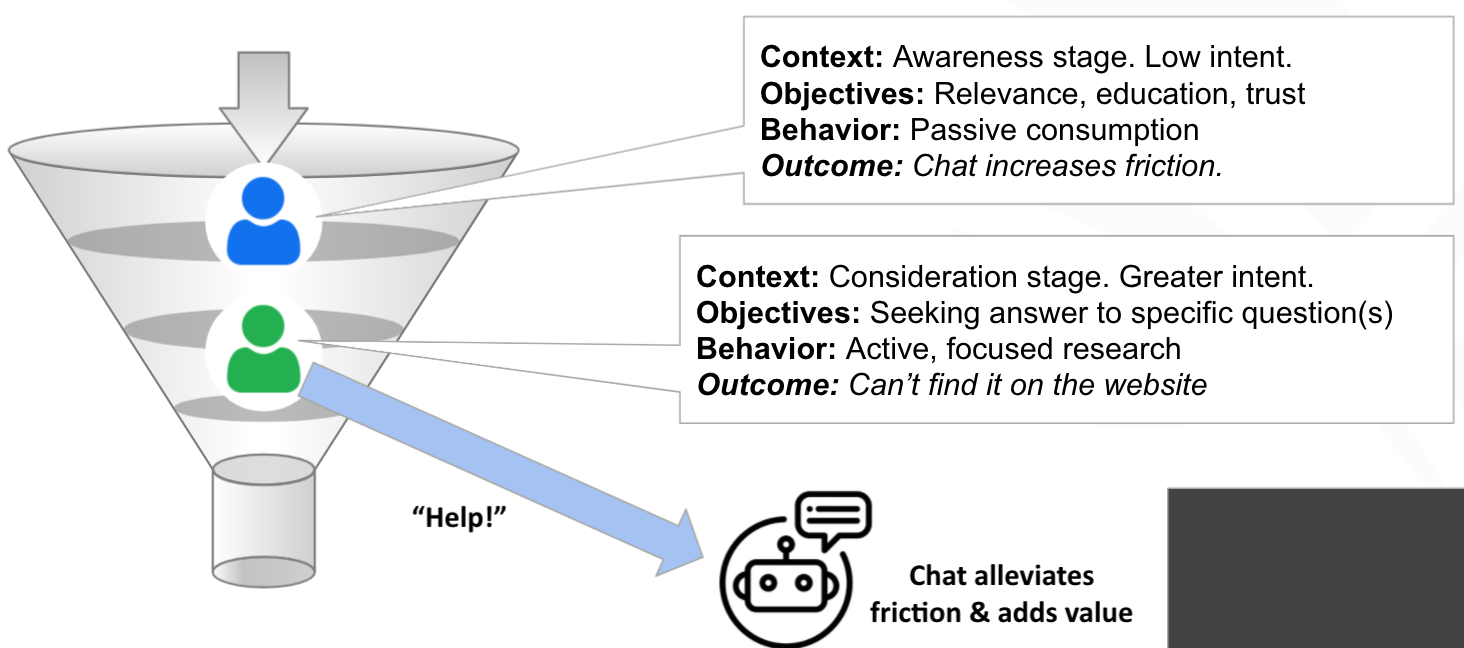

Focus on Key Conversion Points

When personalizing your marketing funnel, some areas are more critical than others. For example, personalization can help on a confirmation page that appears after a user converts, but it's essential on the landing page content that convinces visitors to take the conversion action in the first place.

As you build a strategy for improving your funnel's personalization, you want to emphasize high-leverage points that give you the most return on the time and money invested. In some of these areas, a few small tweaks to your content and visual design can produce outsized gains in conversions.

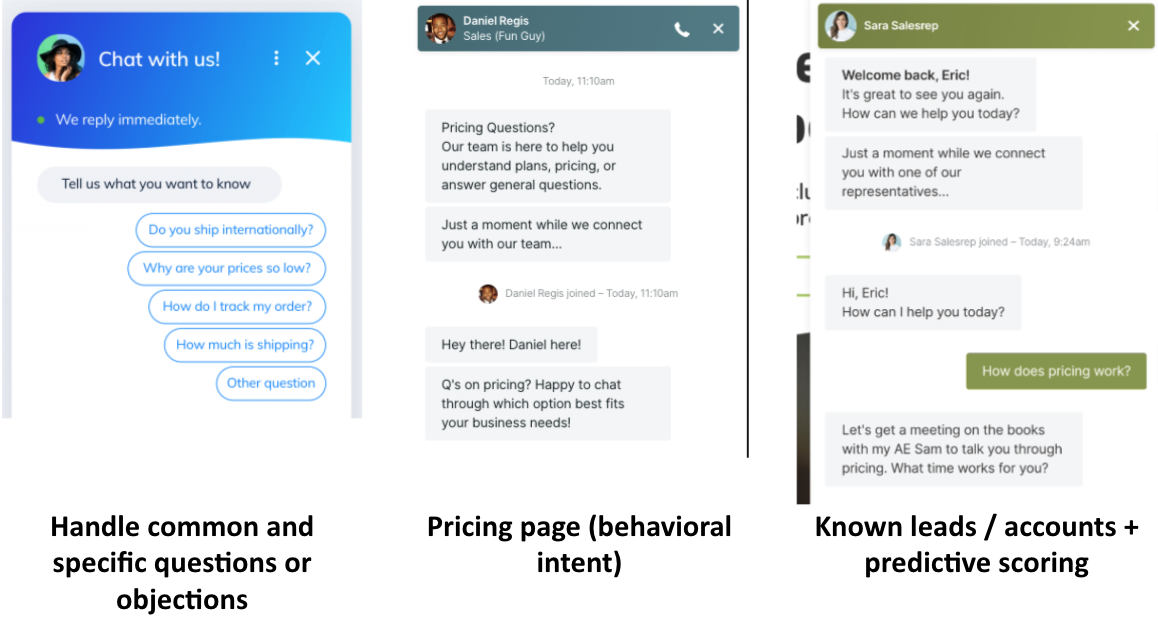

What are some of the critical areas to think about when it comes to conversions? It varies depending on your offering and funnel, but the most common ones we work with on client engagements include:

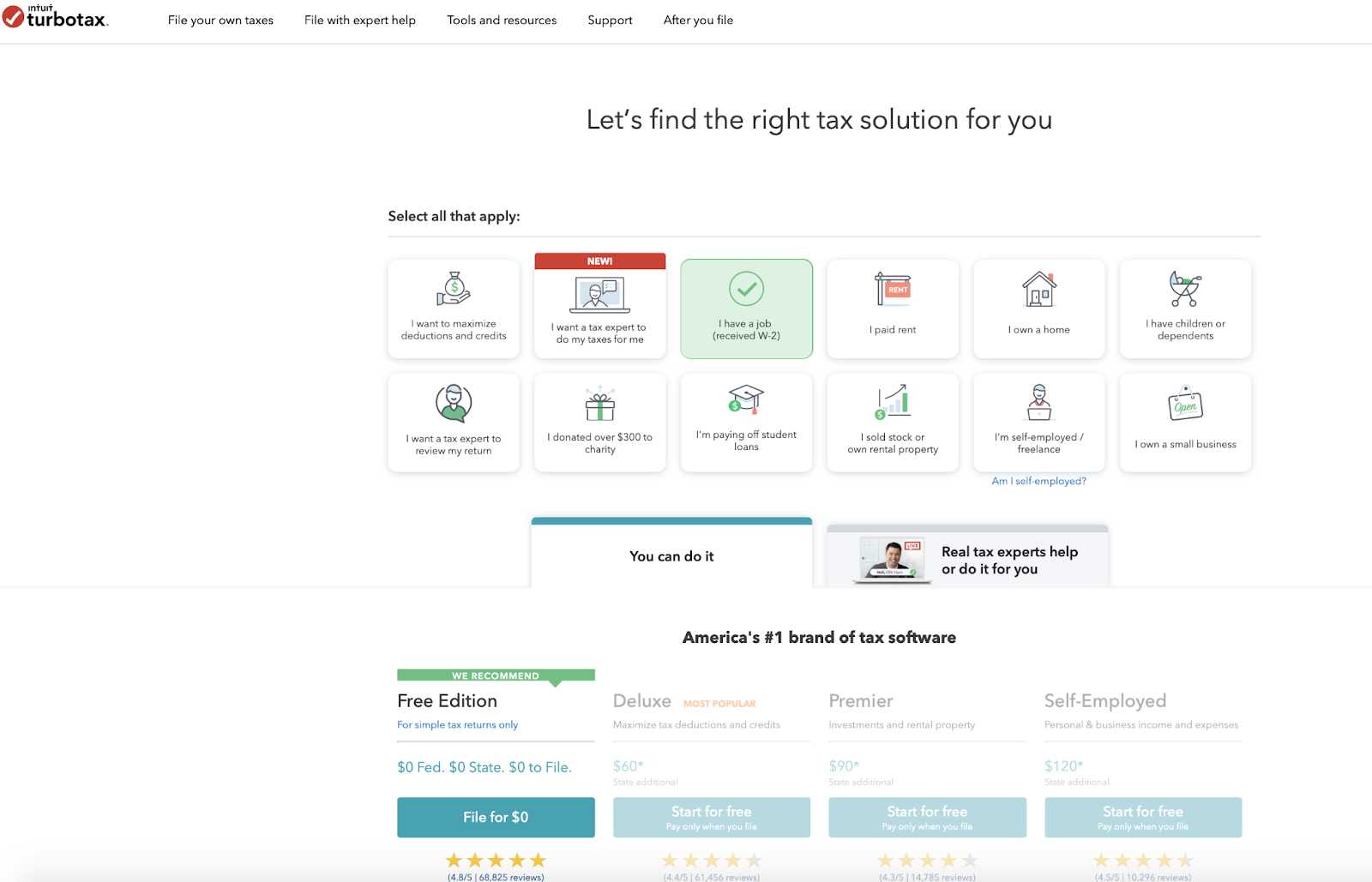

- Landing pages and forms, including headlines, body copy, and form field text. An easy starting point here is running A/B tests that pit one version of a page against another, but there are plenty of other options for improvement if you have the right data.

- Social media ads that target your audience on platforms such as Facebook and LinkedIn. These are important because they are often the first interaction prospective customers have with your business. A successful ad will improve brand awareness and drive the conversions you are aiming for.

- Drip emails that nurture clients and prospects toward signing up for your service or purchasing a product or other offering. In most B2B sales cycles, prospects will want to spend some time getting to know you before even agreeing to schedule a meeting, let alone committing to a significant, high-priced purchase. When each element (subject line, preview text, and body content) is composed well, drip emails can be one of your most effective sales tools.

Final Thoughts on Personalization

Even in niche B2B markets outside the world's fastest-growing sectors, professionals responsible for business buying decisions face a lot of noise and uncertainty. Confronted with many different options and marketing messages across various platforms, buyers have no choice but to focus on the solutions that seem best suited to their specific needs.

While this makes it harder for marketers to break through to prospects and pique their interest, the scenario also creates an opportunity. A well-calibrated message that speaks to prospects' needs and pain points can cut through a lot of that noise and resonate immediately with the right audience. That means higher conversion rates, greater revenue, and ultimately, a more satisfied customer base that stays with you for longer.

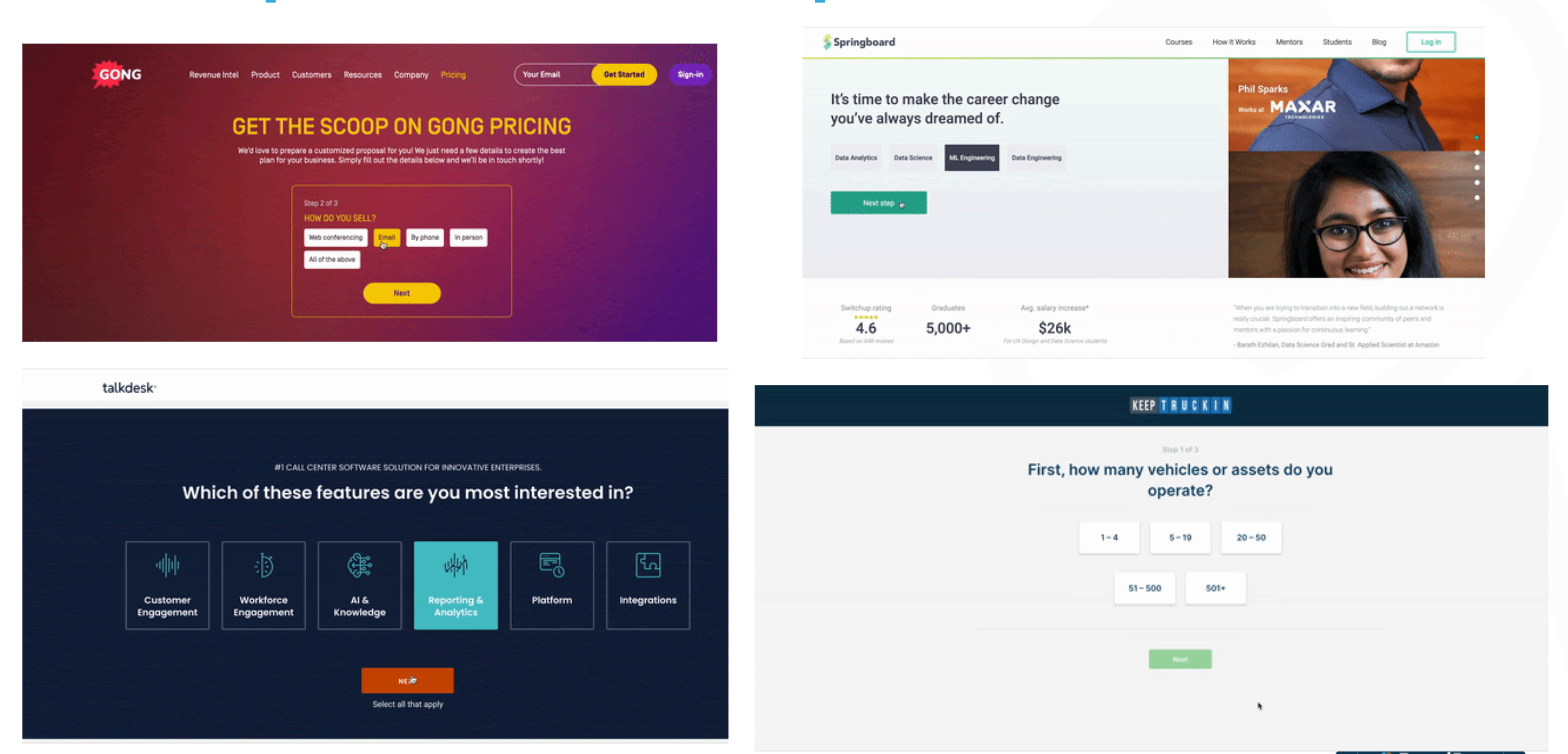

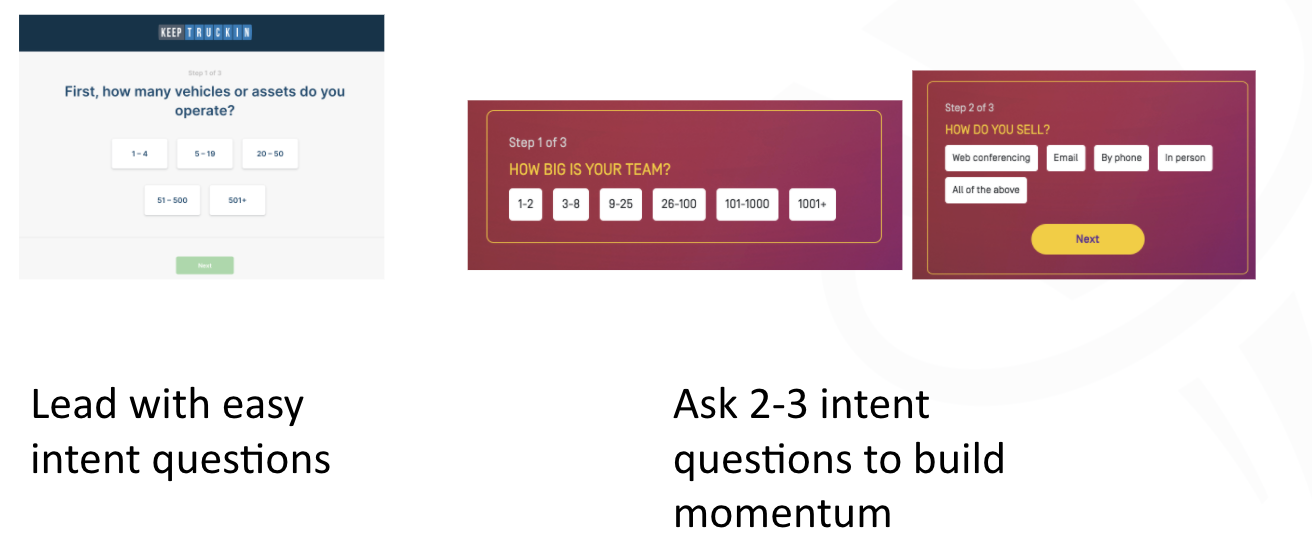

Looking for some help from conversion rate optimization specialists on personalization and how to apply it to your funnel? Fill out this quick quiz to see if our services might be a good fit for your requirements.