FunnelEnvy vs A/B Testing

FunnelEnvy vs A/B Testing – At A Glance

| | FunnelEnvy | A/B Testing |
|---|---|---|
| Optimization Approach | Predictive | Winner Take All |
| Down-Funnel Optimization | Yes | No |
| Scalable without Technical Resources | Yes | No |
| Customer Data Platform | Yes | No |
| Multi Test Pages | Yes | No |
| Experimentation Audiences | Yes | Limited |

Optimization Approach

FunnelEnvy: AI driven predictive

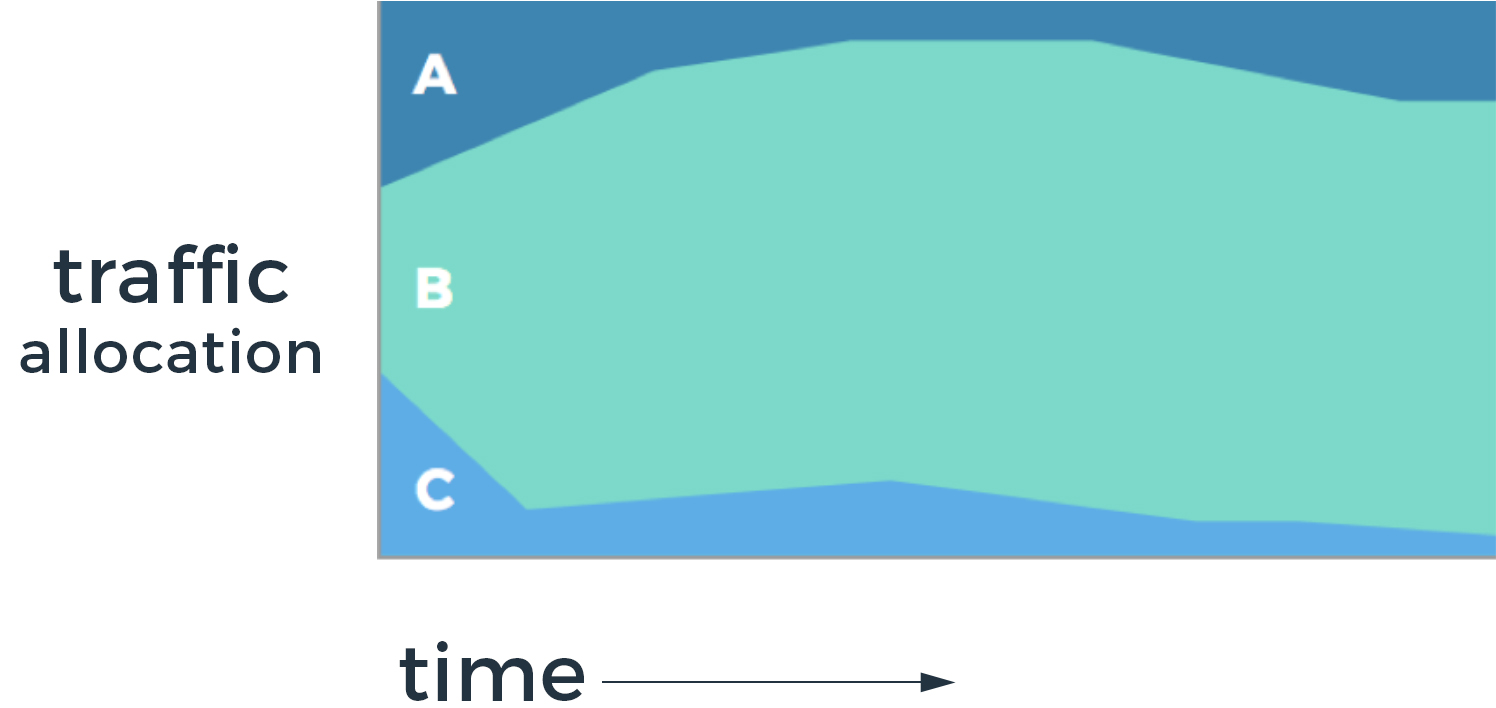

With FunnelEnvy, marketers set up experiments and AI determines optimized experiences on a 1:1 basis. While experiments are initialized with even traffic allocation, the platform is continuously iterating to maximize conversions.

In the above example, traffic is initially allocated evenly. As time progresses, our predictive system determines which individuals should see which variation. This is in contrast to an A/B testing approach, which sends 100% of traffic to the winning variation.

This results in:

- Increased down-funnel conversions. Experiences are personalized for every visitor based on where they are in your funnel.

- Reduced resource constraints. Simply set up tests, and let our AI analyze and iterate to determine who sees what.

- Saved time. Don’t wait for tests to reach statistical significance. Our AI will begin allocating traffic in experiments as soon as you launch them.
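The shift from an even split toward conversion-weighted allocation can be illustrated with a generic multi-armed bandit. The sketch below uses Thompson sampling, a standard technique that is not necessarily what FunnelEnvy runs. It also ignores visitor attributes, so it shows adaptive allocation only, not 1:1 personalization. The function names, conversion rates, and visitor counts are hypothetical.

```python
import random


def choose_variation(stats, rng):
    """Pick a variation by Thompson sampling.

    stats maps a variation name to [conversions, non_conversions].
    Each variation gets a draw from its Beta posterior; the highest draw wins.
    With no data yet, every variation is equally likely to be chosen.
    """
    draws = {
        name: rng.betavariate(conversions + 1, misses + 1)
        for name, (conversions, misses) in stats.items()
    }
    return max(draws, key=draws.get)


def simulate(true_rates, visitors, seed=0):
    """Simulate visitors and return how many saw each variation.

    true_rates holds hypothetical conversion rates, unknown to the allocator.
    """
    rng = random.Random(seed)
    stats = {name: [0, 0] for name in true_rates}
    shown = {name: 0 for name in true_rates}
    for _ in range(visitors):
        name = choose_variation(stats, rng)
        shown[name] += 1
        converted = rng.random() < true_rates[name]
        # Index 0 counts conversions, index 1 counts non-conversions.
        stats[name][0 if converted else 1] += 1
    return shown


if __name__ == "__main__":
    print(simulate({"A": 0.02, "B": 0.06}, visitors=5000))
```

Calling `simulate` returns the number of visitors who saw each variation. When the hypothetical rates differ clearly, traffic drifts toward the stronger variation as evidence accumulates, with no fixed point at which a winner is declared.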

A/B Testing: Static ‘Winner Take All’

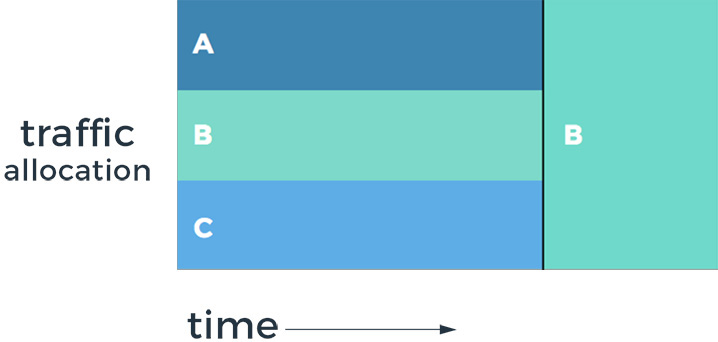

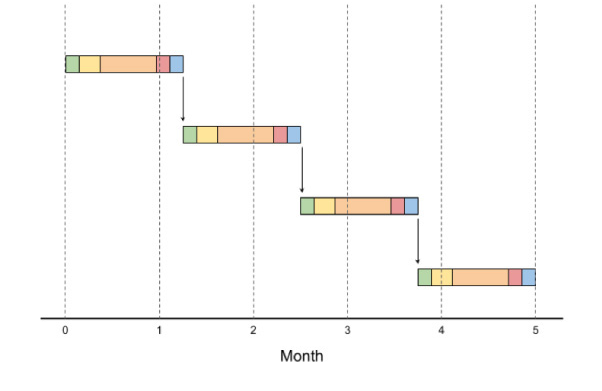

In an A/B test, traffic is split evenly for the duration of the test. Once the test reaches statistical significance, 100% of traffic is sent to the winning variation.
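The significance check behind a winner-take-all decision can be sketched with a two-proportion z-test. This is one common way to test a difference in conversion rates, not the method of any particular A/B testing product; the function names and the 0.05 threshold are assumptions for illustration.

```python
from math import erf, sqrt


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # No variation in the data, so no evidence of a difference.
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return 2 * (1 - cdf)


def declare_winner(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Return "A" or "B" once the test is significant, otherwise None."""
    if two_proportion_p_value(conv_a, n_a, conv_b, n_b) >= alpha:
        return None  # Keep splitting traffic evenly.
    return "B" if conv_b / n_b > conv_a / n_a else "A"
```

Until `declare_winner` returns a variation, every visitor stays in the even split; afterwards the same variation goes to everyone, regardless of who they are.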

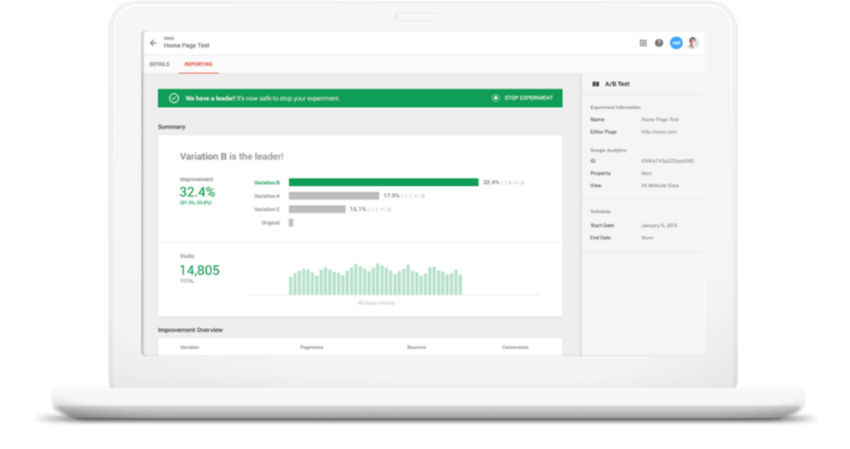

False assumption that ‘B’ should receive 100% of traffic.

Live example from Google Optimize where 100% of the experiment audience will see variation ‘B’.

For B2B, this has a number of major issues impacting success:

1. It doesn’t optimize well for the KPIs that matter – Experimentation doesn’t easily accommodate down-funnel outcomes (revenue pipeline, LTV) or the complexity of B2B traffic and customer journeys.

2. It is resource-intensive to do right – Ensuring that you’re generating long-term meaningful business impact from experimentation requires more than just the ability to build and start tests.

3. It takes a long time to get results – Traffic limitations, the need to reach statistical significance, and a linear testing process make getting results from experimentation slow. In fact, 4 out of 5 experiments never reach statistical significance!

4. Not meant for B2B – Using a ‘winner-take-all’ approach means every customer sees the winning variation. In B2B, however, we know that no two customers are alike. They differ in level of engagement, industry, software stack, and more. By assuming a single winning experience is optimized for EVERY customer, marketers are leaving conversions on the table.

Down-Funnel Optimization

FunnelEnvy: Yes

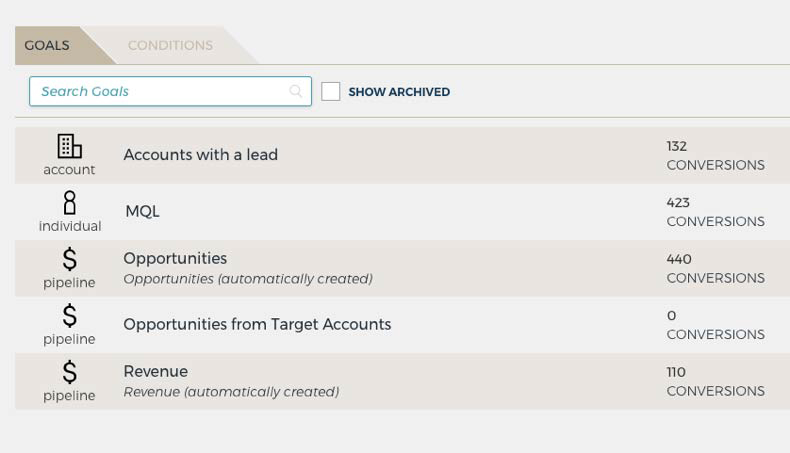

Simply pick a down-funnel goal (MQLs, SQLs, or even revenue) you want to optimize for and our AI engine will dynamically serve the experiment variations that influence those goals. With attribution reporting built in, marketers can directly see the correlation between experiments and realized lift.

A/B Testing: No

With A/B testing, winning variations are determined by on-site activity like form-fills or click-throughs. While this is a valuable outcome for B2C, B2B websites often show little correlation between top-of-funnel activity and down-funnel revenue. This leaves testing as an interesting exercise, but not one that’s tied to value.

Scalable Without Technical Resources

FunnelEnvy: Yes

With AI determining optimized experiences for each customer, there is no need for technical resources. Marketers can simply set up experiments and let our platform handle the rest.

A/B Testing: No

Scaling a successful testing program with A/B testing typically requires hiring 4+ team members. These include:

1. Data scientists to analyze test results and determine subsequent tests

2. Full stack developers to integrate your data

3. Marketers to prioritize tests

4. Front end developers to implement code

Customer Data Platform

FunnelEnvy: Yes

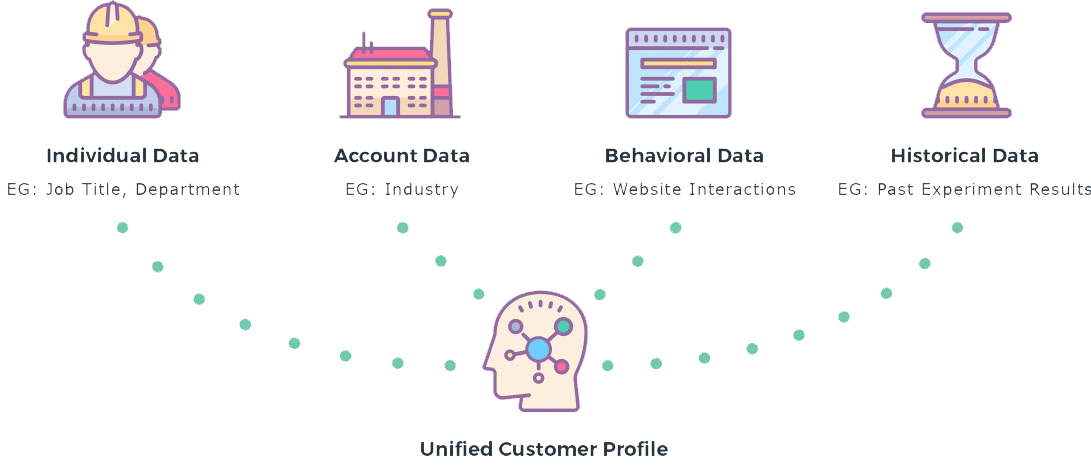

FunnelEnvy is an out-of-the-box CDP. We unify customer data from popular 3rd party data sources (Kickfire, Segment, Clearbit) and key 1st party data sources (CRMs, MAPs), among others.

By unifying lead and customer data, our predictive platform has additional context to optimize experiences on a 1:1 basis.

A/B Testing: No

A/B testing platforms don’t integrate dispersed customer data into unified profiles. Attempting to create an ad-hoc CDP in an A/B testing environment would require significant coding.

Multi Test Pages

FunnelEnvy: Yes (Continuous Optimization)

FunnelEnvy uses a 1:1 continuous optimization approach that can isolate the impact of experiments even if they are on the same page.

A/B Testing: No (months to declare winning tests)

Because traffic is allocated evenly until a winner is declared, marketers have to wait for tests to complete and for results to be analyzed and discussed before there is substantive evidence to inform the next hypothesis.

While tests can be run in parallel, any given set of hypotheses is a sequential effort in which learnings must be applied linearly. As a result, A/B testing teams run on average 10X fewer experiments than is possible with FunnelEnvy.

Experimentation Audiences

FunnelEnvy: Yes (Unlimited Data Combinations)

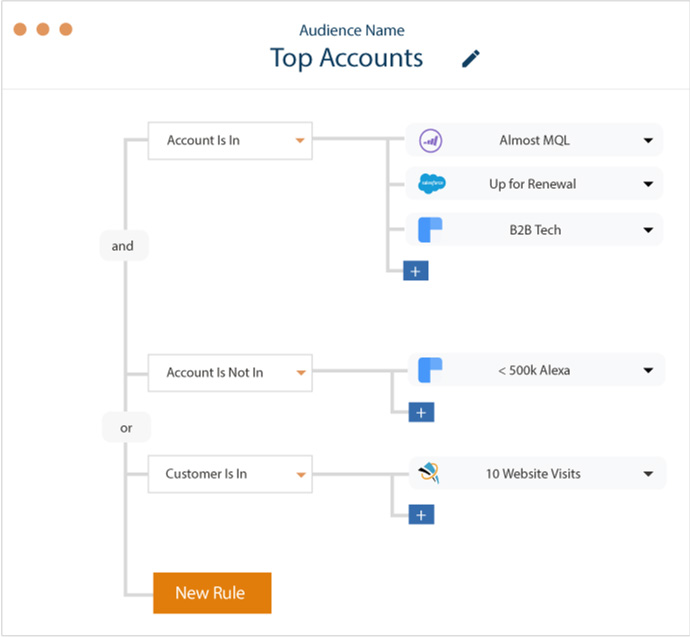

With FunnelEnvy, marketers can create individual or account level audiences using any combination of first and third party data sources. This enables more targeted experiments than is possible in an A/B testing environment.

Audiences can be created by any combination of account or individual level data.
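One way to picture an audience built from combined data sources is as a set of rules that must all hold for a unified visitor profile. The sketch below is an illustration only and does not reflect FunnelEnvy's actual data model; the `Audience` class, the helper functions, and the field names (`industry`, `crm_stage`, `employees`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Rule = Callable[[dict], bool]


@dataclass(frozen=True)
class Audience:
    """A named audience defined by rules over a unified visitor profile."""

    name: str
    rules: Tuple[Rule, ...]

    def matches(self, visitor: dict) -> bool:
        # A visitor belongs to the audience only if every rule passes.
        return all(rule(visitor) for rule in self.rules)


def field_equals(field: str, value) -> Rule:
    """Rule: the profile field equals the given value."""
    return lambda visitor: visitor.get(field) == value


def field_at_least(field: str, minimum: float) -> Rule:
    """Rule: the numeric profile field is at least the given minimum."""
    return lambda visitor: visitor.get(field, 0) >= minimum


# Hypothetical audience mixing third party firmographics (industry, employees)
# with a first party CRM field (crm_stage).
enterprise_software_opps = Audience(
    name="enterprise_software_opps",
    rules=(
        field_equals("industry", "Software"),
        field_equals("crm_stage", "Opportunity"),
        field_at_least("employees", 500),
    ),
)
```

A profile such as `{"industry": "Software", "crm_stage": "Opportunity", "employees": 1200}` would match this audience, while the same account at the `"Lead"` stage would not.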

A/B Testing: Custom code needed

A/B testing software commonly has integrations with 3rd party data providers for basic audience selection. However, marketers can’t build audiences from essential 1st party data like marketing automation software, Salesforce fields, or user behavior.