Several years ago I was hired to help fix some serious website conversion issues for a B2B SaaS client.

A year earlier the client had redesigned their website pricing page and quickly noticed a significant drop in conversions.

They estimated the redesign cost them approximately $100,000 per month.

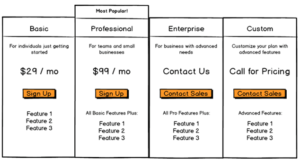

The pricing page itself was quite standard; there were several graduating plan tiers with some self-service options and a sales contact form for the enterprise plans.


Before coming to us, they accelerated A/B testing on the page. After investing a considerable amount in tools and new team members they had several experiments that showed an increase in the tested goals. Unfortunately, they were not able to find any evidence that these experiments had generated long-term pipeline or revenue impact.

I walked them through our conversion optimization approach – the types of A/B tests that we could run and our plan to recapture their lost conversions. The marketing team asked lots of questions, but the VP of Marketing stayed silent. He finally turned to me and said:

“I’m on the hook to increase enterprise pipeline and self-sign up customers by 30% this quarter. What I really want, is a website that speaks to each customer and only shows the unique features, benefits and plans that’s best for them. How do we do that?”

He wanted an order of magnitude better solution. He recognized that his company had to get closer to their customers to win and he wanted a web experience that helped them get there.

I did not think they were ready for that. I told him that we should start with better A/B testing because what he wanted to do would be very complicated, risky and expensive.

Though that felt like the right answer at the time, it is definitely not the right answer today.

Why Website Experimentation Isn’t Enough for B2B

At a time when the web is vital to almost all businesses, rigorous online experiments should be standard operating procedure.

The often-cited quote from the widely distributed Harvard Business Review article The Surprising Power of Online Experiments supports years of evidence from digital leaders suggesting that high-velocity testing is one of the keys to business growth.

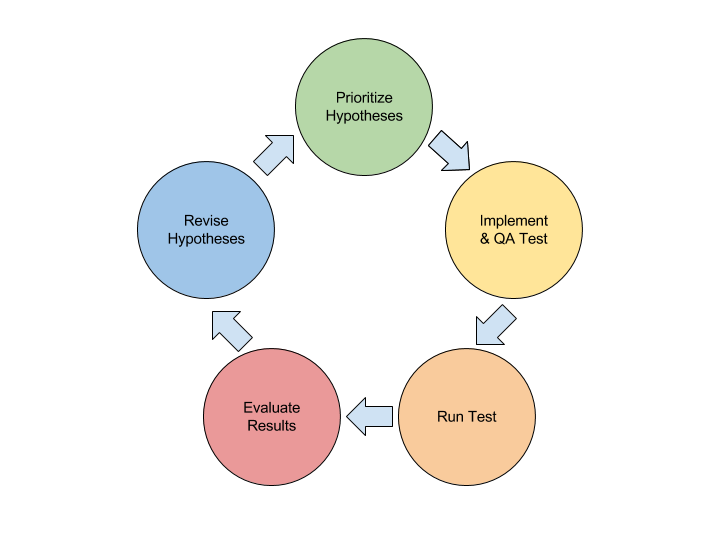

The traditional process of website experimentation involves:

- Gathering Evidence. – “Let’s look at the data to see why we’re losing conversions on this page.”

- Forming Hypotheses. – “If we moved the plans higher up on the page we would see more conversions because visitors are not scrolling down.”

- Building and Running Experiments. – “Let’s test a version with the plans higher up on the page.”

- Evaluating Results to Inform the Hypothesis. – “Moving the plans up raised sign ups by 5% but didn’t increase enterprise leads. What if we reworded the benefits of that plan?”

Every conversion optimization practitioner follows some flavor of this methodology.

Typically, within these experiments, traffic is randomly allocated to one or more variations, as well as the control experience. Tests conclude when there is either a statistically significant change in an onsite conversion goal or the test is deemed inconclusive (which happens frequently).
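To make that concrete, here is a minimal sketch of the two mechanics just described: random allocation and a significance check on an onsite conversion goal. It assumes a simple two-sided, two-proportion z-test, which is only one of several ways testing tools evaluate results. The function names and the visitor and conversion counts are hypothetical and chosen for illustration.

```python
import math
import random


def assign_variation(variations, rng):
    """Randomly allocate a visitor to the control or one of the variations."""
    return rng.choice(variations)


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a is the control, conv_b / n_b is the variation.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both arms convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    rng = random.Random(42)
    print(assign_variation(["control", "plans_higher_up"], rng))

    # Hypothetical counts: 200 of 5,000 control visitors converted
    # versus 250 of 5,000 visitors who saw the variation.
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

Note that the test only looks at the onsite goal. Nothing in it knows whether those extra conversions ever turned into pipeline or revenue.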

If you have strong, evidence-based hypotheses and are able to experiment quickly, this will work well enough in some cases. Over the years we have applied this approach across thousands of experiments and many clients to generate millions of dollars in return.

However, this is not enough for B2B. Seeking statistically significant outcomes on onsite metrics often means that traditional website experimentation becomes a traffic-based exercise, not necessarily a value-based one. While it may still be good enough for B2C sites (e.g. retail ecommerce, travel), where traffic and revenue are highly correlated, it falls apart in many B2B scenarios.

The Biggest Challenges with B2B Website Experimentation

B2B marketers face three main challenges with website experimentation as it is currently practiced:

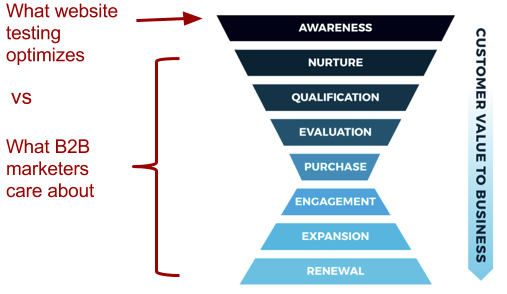

- It does not optimize well for the KPIs that matter. – Experimentation does not easily accommodate down-funnel outcomes (revenue pipeline, LTV) or the complexity of B2B traffic and customer journeys.

- It is resource-intensive to do right. – Ensuring that you are generating long-term and meaningful business impact from experimentation requires more than just the ability to build and start tests.

- It takes a long time to get results. – Traffic limitations, the need to reach statistical significance and a linear testing process make getting results from experimentation a long process.

I. KPIs That Matter

The most important outcome to optimize for is revenue. Ideally, that is the goal we are evaluating experiments against.

In practice, many B2B demand generation marketers are not using revenue as their primary KPI (because it is shared with the sales team), so it is often qualified leads, pipeline opportunities or marketing influenced revenue instead. In a SaaS business it should be recurring revenue (LTV).

If you cannot measure it, then you cannot optimize it. Most testing tools were built for B2C and have real problems measuring anything that happens after a lead is created and further down the funnel, off-website or over a longer period of time.

Many companies spend a great deal of resources on optimizing onsite conversions but make too many assumptions about what happens down funnel. Just because you generate 20% more website form fills does not mean that you are going to see 20% more deals, revenue or LTV.
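A quick back-of-the-envelope sketch shows why. The numbers below are entirely hypothetical: a variation lifts form fills by 20%, but the extra leads are lower quality, so the lead-to-deal rate slips from 10% to 8%. The `expected_deals` helper is just an illustrative name for multiplying through the funnel.

```python
def expected_deals(visitors, form_fill_rate, lead_to_deal_rate):
    """Expected closed deals from a simple two-step funnel."""
    return visitors * form_fill_rate * lead_to_deal_rate


# Hypothetical control: 2.0% of visitors fill the form, 10% of leads close.
control = expected_deals(10_000, 0.020, 0.10)

# Hypothetical variation: 20% more form fills (2.4%), but only 8% of leads close.
variation = expected_deals(10_000, 0.024, 0.08)

if __name__ == "__main__":
    print(f"control deals:   {control:.1f}")
    print(f"variation deals: {variation:.1f}")
```

Under these assumptions the "winning" variation produces about 19 deals against the control's 20, so the onsite goal and the business outcome point in opposite directions.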

You can get visibility into down funnel impact through attribution, but in my experience, it tends to be cumbersome and the analysis is done post-hoc (once the experiment is completed), as opposed to being integrated into the testing process.

If you cannot optimize for the KPIs that matter, the effort that the team puts into setting up and managing tests will likely not yield your B2B company true ROI.

Traffic Complexity and Visitor Context

Unlike most B2C, B2B websites have to contend with all sorts of different visitors across multiple dimensions and often with a long and varied customer journey. This customer differentiation results in significantly different motivations, expectations and approaches. Small business end-users might expect a free trial and low priced plan. Enterprise customers often want security and support and expect to speak to sales. Existing customers or free trial users want to know why they should upgrade or purchase a complementary product.

An added source of complexity (especially if you are targeting enterprise) is the need to market and deliver experiences to both accounts and individuals. With more than six decision makers involved in an enterprise deal, you must be able to speak to the motivations of both the persona or role and the account.

One of the easiest ways to come face-to-face with these challenges is to look at the common SaaS pricing page.

Despite my assertions several years ago, the benefits of A/B testing are going to be limited here. You can change the names or colors of the plans or move them up the page, but ultimately, you are going to be stuck optimizing at the margin – testing hypotheses with low potential impact.

As the VP of Marketing wanted to do with us years ago, we would be better off showing the best plan, benefits and next steps to individual visitors based on their role, company and prior history. That requires optimization based on visitor context, commonly known as website personalization.

Rules-based Website Personalization

The current standard for personalization is “rules-based” – marketers define fixed criteria (rules) for audiences and create targeted experiences for them. B2B audiences are often account- or individual-based, such as target industries, accounts, existing customers or job functions.
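As a rough illustration of what "rules-based" means in practice, here is a minimal sketch in which a marketer hand-writes an ordered list of audience rules and the first matching rule decides the experience. The `Visitor` fields, the thresholds and the experience names are all hypothetical and do not reflect any particular personalization product.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Visitor:
    """Hypothetical visitor attributes a rule could look at."""
    industry: Optional[str] = None
    employee_count: Optional[int] = None
    is_customer: bool = False
    job_function: Optional[str] = None


# Each rule pairs a fixed audience definition with the experience to show.
Rule = Tuple[Callable[[Visitor], bool], str]

RULES: List[Rule] = [
    (lambda v: v.is_customer, "upgrade_offer"),
    (lambda v: (v.employee_count or 0) >= 1000, "enterprise_contact_sales"),
    (lambda v: v.job_function == "security", "security_overview"),
]


def pick_experience(visitor: Visitor,
                    rules: List[Rule] = RULES,
                    default: str = "default_pricing") -> str:
    """Return the experience for the first rule the visitor matches."""
    for matches, experience in rules:
        if matches(visitor):
            return experience
    return default


if __name__ == "__main__":
    print(pick_experience(Visitor(employee_count=5000, job_function="security")))
```

Every rule, threshold and ordering decision here is a manual judgment call that someone has to maintain, which is part of why this approach is hard to scale.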

Unfortunately, website personalization suffers from a lack of adoption and success in the B2B market. 67% of B2B marketers do not use website personalization technology, and only 21% of those that do are satisfied with results (vs 53% for B2C).

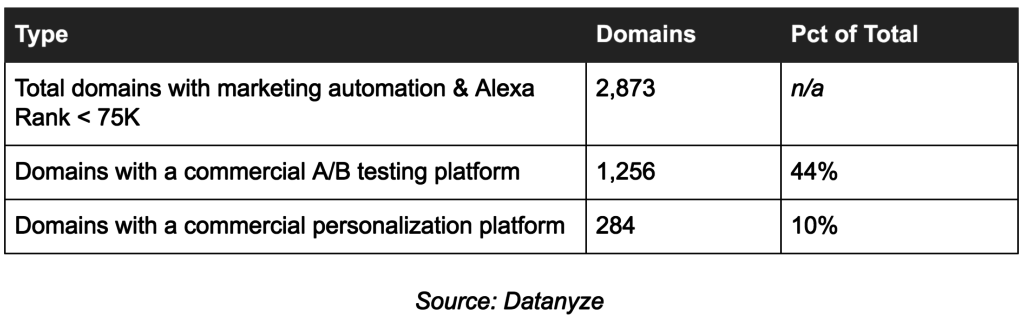

Looking at websites that have a major marketing automation platform and reasonably high traffic, you can see the discrepancy between those using commercial A/B testing vs Personalization:

The much higher percentage of sites that use A/B testing vs personalization suggests that although the value of experimentation is relatively well understood, marketers have not been able to see the same value from personalization.

What accounts for this?

Marketers who support experimentation subscribe to the idea of gathering evidence to establish causality between website experiences and business improvement. Unfortunately, rules-based personalization makes the resource-investment and time to value challenges involved with doing this even harder.

II. Achieving Long-term Impact from Experimentation is Hard and Resource-intensive

At a minimum, to be able to simply launch and interpret basic experiments, a testing team should have skills in UX, front-end development and analytics – and as it turns out, that is not even enough.

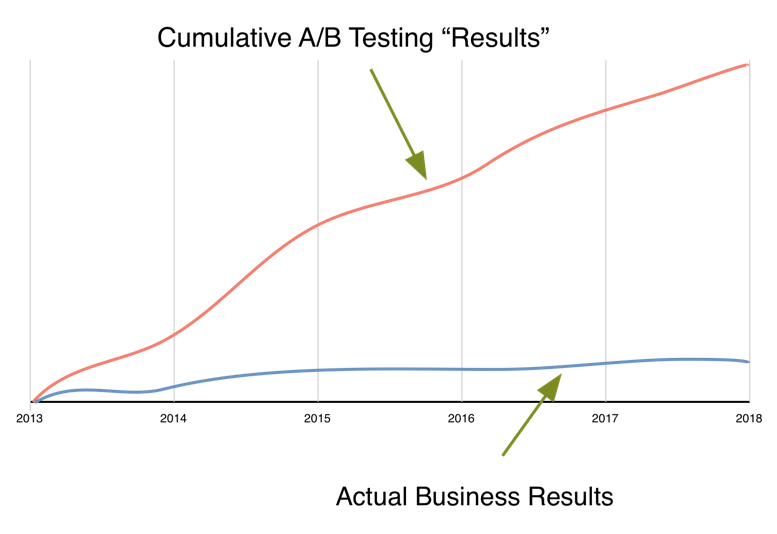

Testing platforms have greatly increased access for anyone to start experiments. However, what most people do not realize is that the majority of ‘winning’ experiments are effectively worthless (80% per Qubit Research) and have no sustainable business impact. The minority that do make an impact tend to be relatively small in magnitude.

It is not uncommon for marketers to string together a series of “winning” experiments (positive, statistically significant change reported by the testing tool) and yet see no long-term impact on the overall conversion rate. This can happen through testing errors or simply because business and traffic conditions change.

As a result, companies with mature optimization programs will typically also need to invest heavily in statisticians and data scientists to validate and assess the long-term impact of test results.

Rules-based personalization requires even more resources to manage experimentation across multiple segments. It is quite tedious for marketers to set up and manage audience definitions and ensure they stay relevant as data sources and traffic conditions change.

We have worked with large B2C sites with over 50 members on their optimization team. In a high volume transactional site with homogeneous traffic, the investment can be justified. For the B2B CMO, that is a much harder pill to swallow.

III. Experimentation Takes a Long Time

In addition to being resource intensive, getting B2B results (aka revenue) from website testing takes a long time.

In general, B2B websites have less traffic than their B2C counterparts. Traffic does have a significant impact on the speed of your testing. For our purposes, however, that is not something I am going to dwell on, as it is relatively well-travelled ground.

Of course, you can do things to increase traffic, but many of us sell B2B products in specific niches that are not going to have the broad reach of a consumer ecommerce site.

What is more interesting is why we think traffic is important and the impact that has on the time it takes to get results from testing.

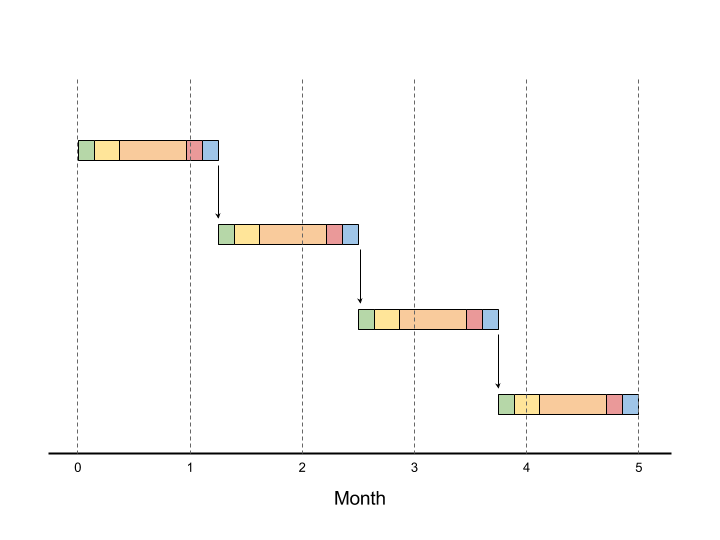

You can wait weeks for significance on an onsite goal (which, as I have discussed, has questionable value). The effect that this has on our ability to generate long-term outcomes, however, is profound. By nature, A/B testing is a sequential, iterative process, which should be followed deliberately to drive learnings and results.

The consequence of all of this is that you have to wait for tests to be complete and for results to be analyzed and discussed before you have substantive evidence to inform the next hypothesis. Of course, tests are often run in parallel, but for any given set of hypotheses it is essentially a sequential effort that requires learnings be applied linearly.

This inherently linear nature of testing, combined with the time it takes to produce statistically significant results and the low experiment win rate, makes actually getting meaningful results from a B2B testing program a long process.

It is also worth noting that with audience-based personalization you will be dividing traffic across segments and experiments. This means that you will have even less traffic for each individual experiment and it will take even longer for those experiments to reach significance.
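To get a feel for the effect, the sketch below estimates how many visitors each arm needs and how long that takes once traffic is divided across segments. It assumes the standard normal-approximation sample size formula for comparing two proportions, and that traffic splits evenly across segments and arms. The baseline rate, lift and daily traffic figures are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Visitors needed per arm to detect a relative lift (two-sided test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_finish(n_per_arm, arms, daily_visitors, segments=1):
    """Days until every arm reaches n_per_arm, assuming an even traffic split."""
    visitors_per_arm_per_day = daily_visitors / segments / arms
    return math.ceil(n_per_arm / visitors_per_arm_per_day)


if __name__ == "__main__":
    # Hypothetical page: 3% baseline conversion, hoping to detect a 20% lift.
    n = sample_size_per_arm(baseline_rate=0.03, relative_lift=0.20)
    print("visitors per arm:", n)
    print("days, one audience:", days_to_finish(n, arms=2, daily_visitors=1000))
    print("days, four segments:",
          days_to_finish(n, arms=2, daily_visitors=1000, segments=4))
```

With the same page and the same hypothesis, splitting traffic into four segments roughly quadruples the wait for each segment's experiment.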

Is There a Better Way to Improve B2B Website Conversions?

The short answer? Yes.

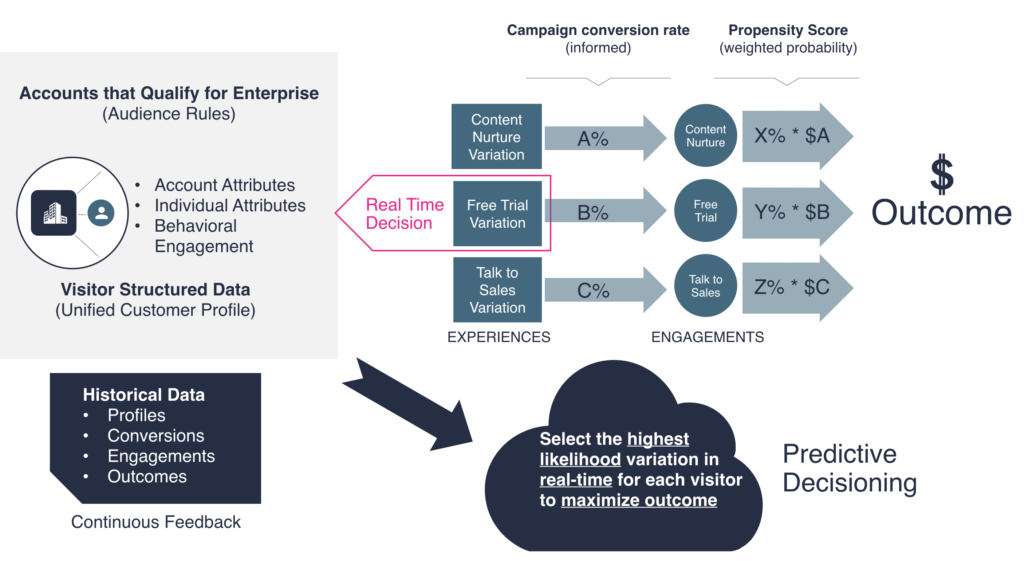

At FunnelEnvy, we believe that with context about the visitor and an understanding of prior outcomes, we can make better decisions than with the randomized testing that websites are using today. We can use algorithms that are learning and improving every decision tree, continuously, to achieve better results with less manual effort from our clients.

Our “experimentation 2.0” solution leverages a real-time prediction model. Predictive models use the past to predict future outcomes based on available signals. If you have ever used predictive lead scoring or been on a travel site and seen “there is an 80% chance this fare will increase in the next 7 days,” then you have seen prediction models in action.

In this case, what we are predicting is the website experience that will lead to the best outcome for each visitor. Rather than testing populations in aggregate, we are making experience predictions on a 1:1 basis based on all of the available context and historical outcomes. Our variation scores take into account expected conversion value as well as conversion probability, and we continuously learn from actual outcomes to improve our next predictions.
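The general idea of scoring a variation by probability times value can be sketched in a few lines. To be clear, this is a toy illustration and not FunnelEnvy's actual model: it assumes a simple lookup keyed on a hashable visitor context, a smoothed conversion rate and a running average of conversion value. The `ExperienceScorer` class, its method names and the dollar values are all hypothetical. It also always picks the top score, whereas a real system would need some way to keep exploring less-tried variations.

```python
from collections import defaultdict


class ExperienceScorer:
    """Toy scorer: expected value = conversion probability * average value."""

    def __init__(self, variations):
        self.variations = list(variations)
        self.shown = defaultdict(int)      # (context, variation) -> impressions
        self.converted = defaultdict(int)  # (context, variation) -> conversions
        self.value = defaultdict(float)    # (context, variation) -> total value

    def score(self, context, variation):
        key = (context, variation)
        # Smoothed conversion rate so unseen combinations start at 0.5.
        prob = (self.converted[key] + 1) / (self.shown[key] + 2)
        # Average value per conversion; assume 1.0 until one is observed.
        conversions = self.converted[key]
        avg_value = self.value[key] / conversions if conversions else 1.0
        return prob * avg_value

    def choose(self, context):
        """Pick the variation with the highest expected value for this context."""
        return max(self.variations, key=lambda v: self.score(context, v))

    def record(self, context, variation, converted, value=0.0):
        """Feed an observed outcome back in so later predictions improve."""
        key = (context, variation)
        self.shown[key] += 1
        if converted:
            self.converted[key] += 1
            self.value[key] += value


if __name__ == "__main__":
    scorer = ExperienceScorer(["free_trial", "contact_sales"])
    enterprise = ("enterprise", "decision_maker")
    # Hypothetical history: trials convert often but are worth little;
    # sales conversations convert rarely but are worth far more.
    for i in range(10):
        scorer.record(enterprise, "free_trial", converted=(i < 9), value=100.0)
        scorer.record(enterprise, "contact_sales", converted=(i < 1), value=10000.0)
    print(scorer.choose(enterprise))
```

In this example the variation that converts less often still wins for enterprise visitors because each conversion is worth so much more, which is exactly the trade-off an onsite conversion rate alone cannot see.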

Ultimately, the quality of these predictions is based on the quality of the signals that we provide the model and the outcomes that we are tracking. By bringing together behavioral, 1st party and 3rd party data we are building a Unified Customer Profile (UCP) for each visitor and letting the algorithm determine which attributes are relevant signals. To ensure that our predictive model is optimizing for the most important outcomes, we incorporate Full Funnel Goal Tracking for individual (MQL, SQL) and account (opportunities, revenue, LTV) outcomes.

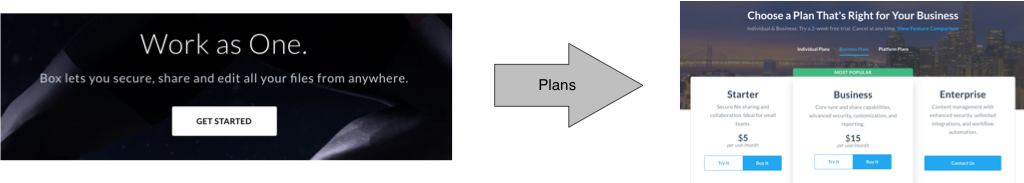

Example: Box’s Homepage Experience

To see what a predictive optimization approach can do, let’s look at a hypothetical example:

Box.com has an above the fold Call to Action (CTA) that takes you to their pricing page. This is a sensible approach when you do not have a lot of context about the visitor because from the pricing page, you can navigate to the right plan and option that is most relevant.

Of course, they are putting a lot of burden on the visitor to make a decision. There are a total of 9 plans and 11 CTAs on that pricing page alone, and not every visitor is ready to select one – many still need to be educated on the solution. We could almost certainly increase conversions if we made that above the fold experience more relevant to a visitor’s motivations.

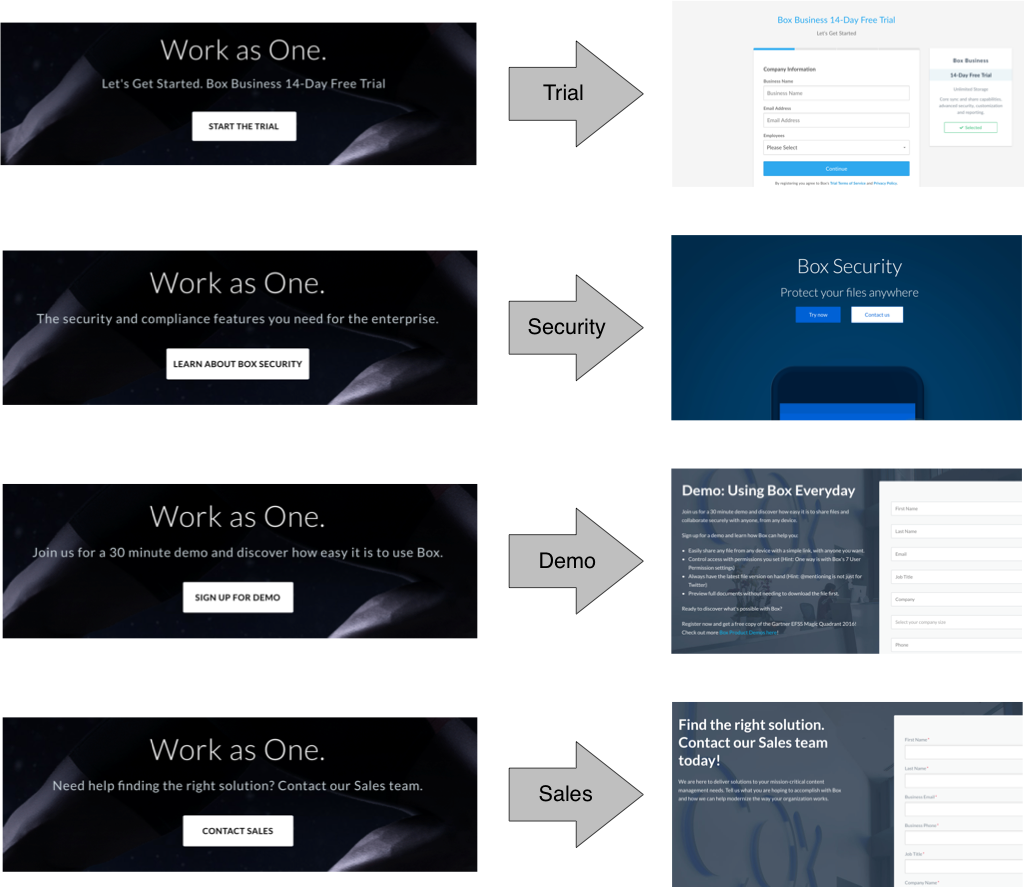

SMB visitors might be ready to start the free trial once they have seen the demo; the enterprise infosec team might be interested in learning about Box’s security features first; customers who are not ready to speak to sales or sign up might benefit from the online demo; and engaged decision makers at enterprise accounts might be ready to fill out the sales form.

Modifying the homepage sub-headline and CTA to accommodate these experiences could look something like the image below. Note that they take you down completely different visitor journeys, something you would never do with a traditional A/B test.

If we had context about the visitor and historical data we could predict the highest probability experience that would lead to both onsite conversion as well as down funnel success. The prediction would be made on a 1:1 basis as the model determines which attributes are relevant signals.

Finally, because we are automating the learning and prediction model, this would be no more difficult than adding variations to an A/B test, and far simpler and more precise than rules-based personalization. The team would be relieved of the analytical heavy lifting, new variations could be added over time and changing conditions would automatically be incorporated into the model.

Conclusion

Achieving “10X” improvements in today’s very crowded B2B marketplace requires shifts in approach, process and technology. Our ability to get closer to customers is going to depend on the better experiences that we can deliver to them, which makes the rapid application of validated learnings that much more important.

“Experimentation 1.0” approaches gave human marketers the important ability to test, measure and learn, but the application of these in a B2B context raises some significant obstacles to realizing ROI.

As marketers, we should not settle for secondary indicators of success or delivering subpar experiences. Optimizing for a download or form fill and just assuming that is going to translate into revenue is not enough anymore. Understand your complex traffic and customer journey realities to design better experiences that maximize meaningful results, instead of trying to squeeze more out of testing button colors or hero images.

Finally, B2B marketers should no longer wait for B2C oriented experimentation platforms to adopt B2B feature sets. “Experimentation 2.0” will overcome our human limitations to let us realize radically better results with much lower investment.

New platforms that prioritize relevant data and take advantage of machine learning at scale will alleviate the limitations of A/B testing and rules-based personalization. Solutions built on these can augment and inform the marketers’ creative ability to engage and convert customers at a scale that manual experimentation cannot approach.

This post originally appeared on LinkedIn.